Why experiments are stupid but we need more of them

Most of us are excited about social learning in the real world. How do the dolphins of Shark Bay learn to fish with sponges, do chimpanzee mothers teach their young how to crack open nuts, can starting moves in the board game Go or formations in football really be said to “evolve” – and what has culture to do with our uniquely human growth and brainy heads? These questions are fun to pose but notoriously hard to answer. Social learning research typically deals with complex, dynamic systems spanning individual psychological processes and large-scale population patterns, all interacting in highly non-linear ways. In contrast to this staggering complexity, experiments, especially those conducted in the lab, are gross simplifications of reality. Abstracting away from most real-world detail, they result in a highly idealized version of the phenomena they aim to represent. Good experiments, however, also facilitate causal inference, lay bare the fundamental structure of an otherwise overly complex system, and can elicit behavioral strategies that may be impossible to observe in naturalistic settings. Well-designed experiments thus resemble theoretical models, in that their simplification is a critical design feature, rather than a bug. If the power of experiments lies in their artificiality, we should maybe aim less for “realism” when designing them.

Experiments and causal inference

“Correlation does not imply causation.” Like a mantra, this sentence is thrown at us in introductory stats courses and at academic conferences, typically followed by a call for experiments to resolve the issue. So why exactly are experiments helpful here, and are they really a causal panacea? X causes Y when intervening on X changes what we expect to see in Y. Formally, P(Y=y|do(X=x)) denotes the probability (or frequency) that event Y=y would occur if treatment condition X=x were enforced. Unfortunately, we cannot directly observe this: all we get from empirical data and statistical models is the probability of seeing Y conditional on observing X, P(Y=y|X=x). As noted by philosophers David Hume and Immanuel Kant, causal inference is never purely empirical, but necessarily rests on additional causal assumptions projected onto the data. The question then becomes how well causal assumptions can be justified, and that is where experiments enter the stage. To get an unbiased estimate of the causal effect of X on Y, we need to eliminate the effect of other variables that might influence both X and Y and thus generate a spurious correlation between the two. However, the list of potential confounding factors is often limited only by our imagination, and randomly picking some handy covariates to throw into a regression can do more harm than good (the dreaded “collider bias” is always lurking in the dark). Therefore, drawing causal inferences from observational data is hard, and may even be impossible in most cross-sectional studies.
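To make the collider problem concrete, here is a minimal simulation sketch in Python. Everything in it is an illustrative assumption rather than part of any cited study: the variable names, the sample size, the selection threshold of 1.0, and the choice to condition on the collider by selecting a subsample (which stands in for adding it as a covariate). X and Y are generated independently, and C is caused by both.

```python
import random

def pearson(xs, ys):
    """Plain Pearson correlation, written out to avoid extra dependencies."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

rng = random.Random(7)  # fixed seed so the sketch is reproducible
n = 20_000

x = [rng.gauss(0, 1) for _ in range(n)]
y = [rng.gauss(0, 1) for _ in range(n)]  # independent of x by construction
# Hypothetical collider: a variable caused by both x and y.
c = [xi + yi + rng.gauss(0, 1) for xi, yi in zip(x, y)]

# Correlation in the full sample (no conditioning on c).
r_all = pearson(x, y)

# Correlation after conditioning on the collider by keeping only cases with high c.
selected = [(xi, yi) for xi, yi, ci in zip(x, y, c) if ci > 1.0]
r_selected = pearson([s[0] for s in selected], [s[1] for s in selected])

print(f"correlation, full sample:        {r_all:.3f}")
print(f"correlation, selected on c > 1:  {r_selected:.3f}")
```

In the full sample the correlation should sit close to zero, whereas in the selected subsample a negative association appears even though X has no effect on Y. The "control" variable created the very bias it was meant to remove.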

Instead of measuring and statistically accounting (“controlling”) for potential confounds, experiments take a different approach. In experimental research, we aim to close ALL potential “backdoor paths” by directly setting X, the independent or explanatory variable, to a particular value. Because we as experimenters directly assign X, we can ensure (at least in an ideal world) that a resulting statistical association between X and Y is not caused by any observed or unobserved third variable. All variation in X is due to us, so there is no potential for any other cause. If this sounds too good to be true, maybe it is. There is nothing magical about experiments. All inferential power rests on the assumption that we manage to set the causal variable X (and nothing else!) through our experimental manipulation. However, this is not straightforward, as we are usually only able to manipulate some proxy of the actual explanatory variable. The theoretically relevant variable itself, the true X, often cannot be directly manipulated (e.g., we do not know if a patient took a drug as prescribed or if a participant really attended to a stimulus in the anticipated way) or may not be directly observable, and is instead a theoretical construct (e.g., “intelligence” or “effective population size”).
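The logic of closing backdoor paths by assignment can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a model of any real study: the function name `simulate`, the unobserved confounder `u`, the effect sizes, and the coin-flip assignment are all made up for the example. The true causal effect of X on Y is fixed at 1.0.

```python
import random

def simulate(n, randomized, true_effect=1.0, seed=1):
    """Return the naive difference in mean Y between X=1 and X=0.

    u is a hypothetical unobserved confounder that raises Y and, in the
    observational case only, also raises the chance that X=1.
    """
    rng = random.Random(seed)
    y_treated, y_control = [], []
    for _ in range(n):
        u = rng.gauss(0.0, 1.0)
        if randomized:
            # Experimenter sets X by coin flip: X no longer depends on u.
            x = rng.random() < 0.5
        else:
            # X depends on u: the backdoor path X <- u -> Y is open.
            x = u + rng.gauss(0.0, 1.0) > 0
        y = true_effect * x + 2.0 * u + rng.gauss(0.0, 1.0)
        (y_treated if x else y_control).append(y)
    return sum(y_treated) / len(y_treated) - sum(y_control) / len(y_control)

observational = simulate(50_000, randomized=False)
experimental = simulate(50_000, randomized=True)

print(f"observational estimate: {observational:.2f}")
print(f"experimental estimate:  {experimental:.2f}  (true effect: 1.00)")
```

With these assumed settings, the observational contrast should come out far above the true effect, while the randomized contrast should land close to 1.0. Note that the sketch assumes a perfect manipulation: the coin flip sets X itself and nothing else, which is exactly the assumption that real experiments struggle to guarantee.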

Understanding (cultural) evolutionary forces, one at a time

Beyond their general causal power, experiments offer inferential advantages that apply to social learning research more specifically. Formal social learning theory is inherently dynamical, describing quantitative changes in traits or behavior over time depending on a number of interacting forces. Such forces include demography, network dynamics and social learning strategies, i.e. the rules that govern how information is passed on between individuals. Experiments allow us to keep some of these forces constant, while observing cultural dynamics generated by a smaller, thus more manageable, set of factors. Synthesizing the results of several related experiments, each focusing on different moving parts, offers a deeper understanding of a system that may be too complex to grasp otherwise.

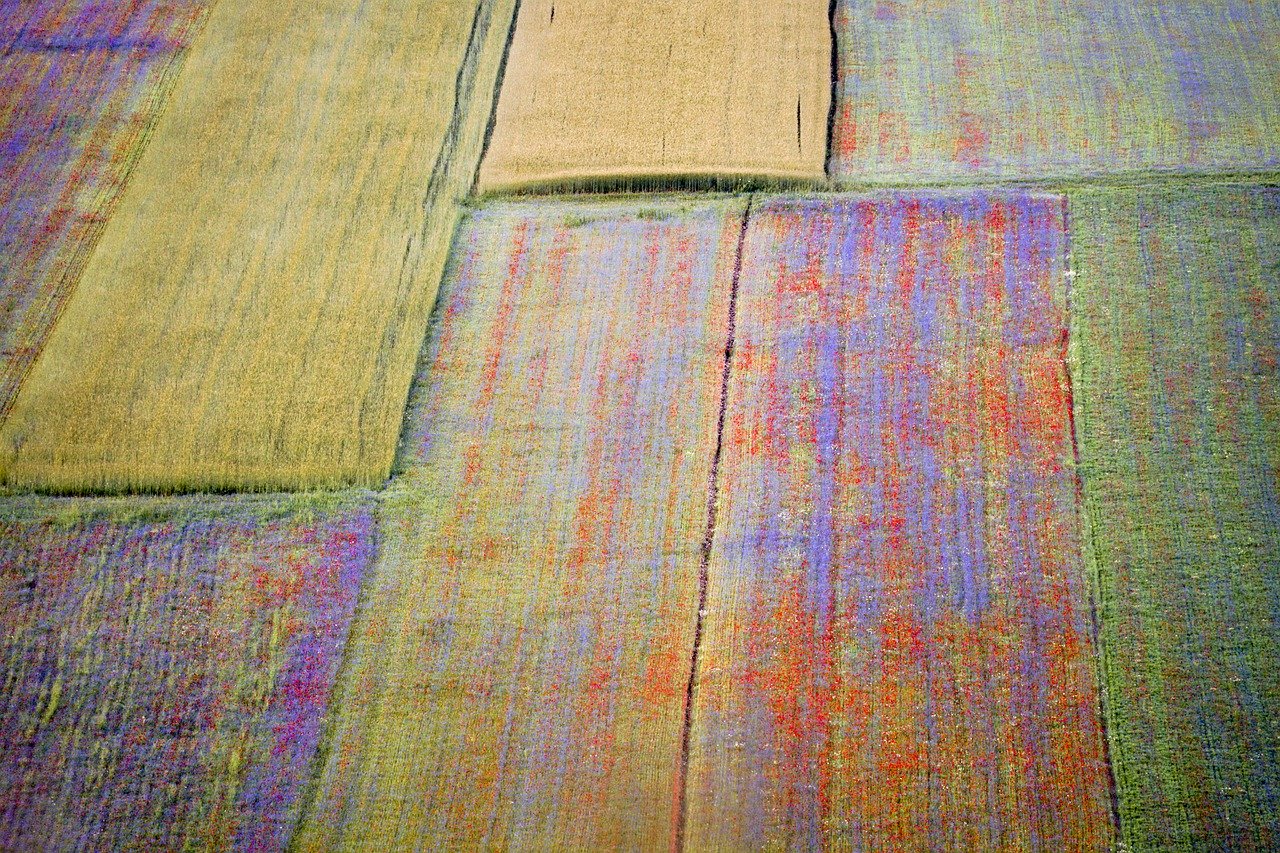

This is well illustrated by an example from my own PhD research. Previous human lab experiments have let fixed groups of individuals engage in repeated rounds of a learning task, and then analyzed how individuals’ choices are affected by the payoffs they received and the choices of other members of their group (e.g. McElreath et al., 2008; Toyokawa et al., 2019). These experiments taught us how humans combine individual and social information strategically, and what consequences such strategies can have for population-level cultural patterns. We replicated the basic structure of previous experiments but included critical new forces in addition to learning psychology: migration and demography. We found systematic differences in learning after spatial (migration) compared to temporal environmental changes, as predicted by mathematical modeling. We also analyzed how adaptive learning dynamics unfolded over time after migration into another region, shedding new light on the psychological processes underlying acculturation and cultural diversity. Admittedly, this experiment is still stupidly simple and lacks many factors critical in nature. For complex systems, there cannot be a single perfectly realistic experimental model to rule them all. Instead, as with theoretical modeling, using simplifying assumptions is a clever choice, rather than a sign of defeat. It may be the only way to make progress and apply rules of logic to a chaotic reality.

Dynamic data for dynamic theory

Another major advantage of experimental work in cultural evolution lies in potentially higher data quality, which allows a more direct test of theoretically relevant parameters. Making reliable inferences about cultural evolutionary forces generally requires detailed time-series data from consecutive cultural transmission events, which cannot really be documented outside the realm of controlled experiments. By experimentally generating fine-grained time-series data, we can fit formal evolutionary or learning models to the data, allowing a more direct assessment of relevant theory than simple group comparisons. Despite a wealth of formal social learning theory that allows direct quantitative tests of relevant generative mechanisms, many experimenters test only qualitative predictions about rates of behavior in different conditions. This is a problem: we are typically more interested in the underlying learning strategies than in the overt behavior itself, yet many different processes can result in the same empirical pattern (the so-called “inverse problem”). In addition, this gap between theory and experimental design can make it difficult to assess the implications of empirical results for theoretical predictions.

To infer actual learning strategies from behavior, we can fit, for instance, experience-weighted attraction (EWA) models that link individual (reinforcement-learning) updating rules and social information to population-level cultural dynamics. These models can also be used to study learning psychology in less artificial settings (Barrett et al., 2017; Aplin et al., 2017). Still, our inferential powers are greatly enhanced under experimental control, i.e., when we know exactly who was observed at what time and what payoff resulted from a given choice. Therefore, by replicating the structure of theoretical models, experiments can provide a better bridge between hypothesized theoretical mechanisms and their empirical tests. So-called “experimental evolutionary simulations” extend this approach by using data generated by real participants as input for computer simulations of evolutionary dynamics (e.g. Morgan et al., 2020). A large caveat is that (cultural) evolutionary models usually describe changes in a population occurring over many generations, while dynamics in the lab typically unfold in less than an hour. It is often not explicit in the experimental literature whether researchers (myself included) believe we are measuring mechanisms/strategies that evolved outside the lab (through genetic or cultural evolution), or whether we assume these strategies evolve through learning dynamics or rational decision making within the experiment. We need to be clearer on this.
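For readers unfamiliar with EWA models, the following Python sketch shows one common simplified formulation of the generative core: a recency-weighted attraction update, a softmax choice rule for the individual component, and a frequency-dependent social component. It is not necessarily the exact specification used in the studies cited above. The function names and the parameter names `phi` (updating rate), `lam` (sensitivity to attractions), `sigma` (weight of social information) and `f` (strength of frequency dependence) are assumptions chosen for illustration.

```python
import math

def update_attractions(attractions, chosen, payoff, phi):
    """Recency-weighted update: the chosen option moves toward its payoff,
    unchosen options decay toward zero."""
    return [
        (1 - phi) * a + phi * (payoff if k == chosen else 0.0)
        for k, a in enumerate(attractions)
    ]

def choice_probabilities(attractions, neighbor_counts, lam, sigma, f):
    """Mix an individual (softmax) and a social (frequency-dependent) component."""
    # Individual component: softmax over attractions (shifted for numerical stability).
    m = max(attractions)
    weights = [math.exp(lam * (a - m)) for a in attractions]
    individual = [w / sum(weights) for w in weights]

    # Social component: observed choice counts raised to the power f.
    powered = [n ** f for n in neighbor_counts]
    total = sum(powered)
    if total == 0:
        # No social information available: fall back on individual learning.
        return individual
    social = [p / total for p in powered]

    return [(1 - sigma) * i + sigma * s for i, s in zip(individual, social)]

# One hypothetical learner, two options, one observed round.
attractions = update_attractions([0.0, 0.0], chosen=0, payoff=1.0, phi=0.5)
probs = choice_probabilities(
    attractions, neighbor_counts=[1, 3], lam=2.0, sigma=0.3, f=2.0
)
print(attractions, probs)
```

Fitting such a model means finding the parameter values that make the observed sequence of choices most probable, which is only possible if we know what each individual chose, what payoff they received, and whose choices they could observe at each time step. This is precisely the information a controlled experiment records by design.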

Experiments and observation joining forces

All this cheering for controlled experiments is, of course, not meant to cast any doubt on the central importance of long-term observational field data. Cultural evolution unfolds in real-world ecological and social contexts, which must be the ultimate goal of our understanding. Experiments, however, do provide information on individual-level tendencies necessary to understand those larger-scale dynamics. Behavior in real-world social situations is a complex product of personal psychological preferences, the local environment and a dense web of societal norms and expectations that constrain individual behavior and choices. Anthropologists, for instance, have analyzed food-sharing and helping networks in small-scale societies to test hypotheses about the evolution of cooperation. Such observational data alone, however crucial, do not let us infer personal preferences, because decisions about whom to give food or money to are influenced not only by what individuals want but also by social norms, opportunities and power. Experimental economic games thus nicely complement the story told by real social networks, demonstrating how individuals would behave “if no one was looking”. By minimizing real-world constraints, they reveal participants’ private preferences in a way that observational and interview data may not (Pisor et al., 2020).

Similar to theoretical models, experiments are useful precisely BECAUSE they are unrealistic (Smaldino, 2017). The real world is complicated and replacing a complex reality with a slightly less complex, but still incomprehensible, model or experiment does not help much either. Experiments are most useful when they allow direct inferences about relevant theoretical parameters. Therefore, we experimenters should not aim to match a messy reality but the structure of relevant theory.

References

Aplin, L. M., Sheldon, B. C., & McElreath, R. (2017). Conformity does not perpetuate suboptimal traditions in a wild population of songbirds. Proceedings of the National Academy of Sciences, 114(30), 7830-7837.

Barrett, B. J., McElreath, R. L., & Perry, S. E. (2017). Pay-off-biased social learning underlies the diffusion of novel extractive foraging traditions in a wild primate. Proceedings of the Royal Society B: Biological Sciences, 284(1856), 20170358.

McElreath, R., Bell, A. V., Efferson, C., Lubell, M., Richerson, P. J., & Waring, T. (2008). Beyond existence and aiming outside the laboratory: estimating frequency-dependent and pay-off-biased social learning strategies. Philosophical Transactions of the Royal Society B: Biological Sciences, 363(1509), 3515-3528.

Morgan, T. J., Suchow, J. W., & Griffiths, T. L. (2020). Experimental evolutionary simulations of learning, memory and life history. Philosophical Transactions of the Royal Society B: Biological Sciences, 375(1803), 20190504.

Pisor, A. C., Gervais, M. M., Purzycki, B. G., & Ross, C. T. (2020). Preferences and constraints: the value of economic games for studying human behaviour. Royal Society Open Science, 7(6), 192090.

Smaldino, P. E. (2017). Models are stupid, and we need more of them. Computational social psychology, 311-331.

Toyokawa, W., Whalen, A., & Laland, K. N. (2019). Social learning strategies regulate the wisdom and madness of interactive crowds. Nature Human Behaviour, 3(2), 183-193.